

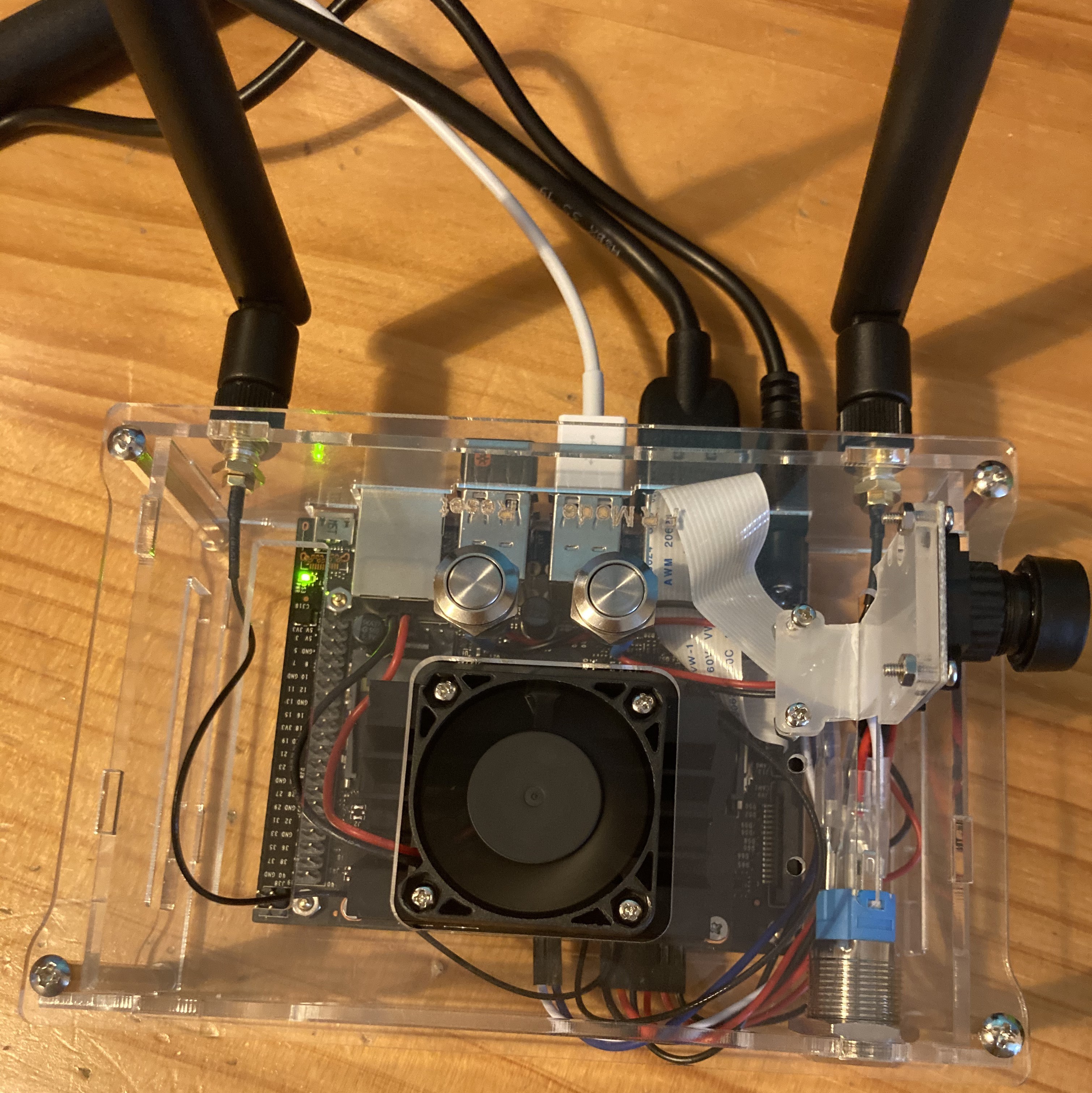

The final result of my PC build.

Early Encounters with Computer Hardware

The first PC I recall encountering was an iMac G3. I remember the day my parents walked through our front door with one of these clunky machines and placed it in our front living room. While I was intrigued by the machine itself, I spent most of my time with the iMac G3 playing with the weighted rubber ball inside the mechanical mouse rather than interacting with the computer. A few years later, my interest progressed from playing with the mouse to using the PC for basic tasks such as creating documents, playing games and writing simple programs. Throughout these early years the inner workings of the PC remained a mystery to me; it wasn't until my mid-to-late teens that this mystery began to unravel. An early formative experience with computer hardware came from an internship I did at EMC (now known as Dell EMC). Each morning during the internship, our parents dropped my friend Patrick and me off at the factory floor, where one of the engineers was tasked with supervising us for the day. The day usually started with a grinning engineer leading us to a server room that contained an impressive mass of entangled cables; our job was cable management. After some days of laboring (and laughing at the mess in front of us), we earned enough respect from the engineering team to be allowed to hand them tools while they soldered and worked on machines on the factory floor. This led to conversations about storage systems, servers and virtualization, both with engineers on the factory floor and with others who happened to pass through. By the end of the internship, I had a basic understanding of the components of a server and of storage devices, and a very vague understanding of virtualization.
This internship was followed by other experiences, including helping to set up computers in high school, working with Arduinos and Nvidia developer kits, and eventually building my own PC. While I still wouldn't consider myself a hardware expert by any stretch of the imagination, I do have some experience with computer hardware. In this article, I share some details of my experience building my first PC, along with some tips and information to help anyone thinking of building their own.

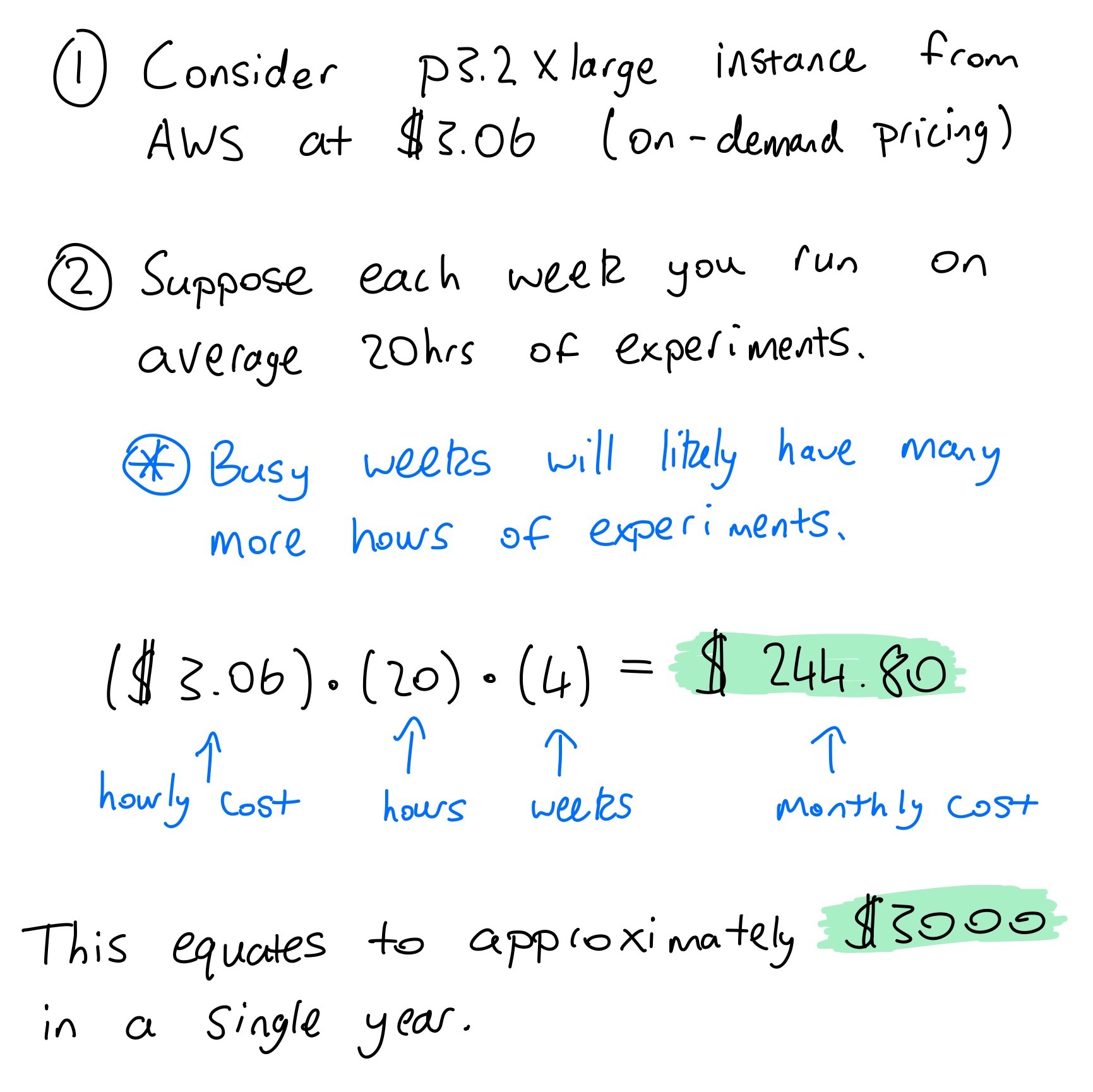

Overhead shot of an Nvidia Jetson Nano I built for a past project.

The Dawn of Deep Learning

As the title of this article suggests, this isn't a story about building just any PC but rather a 'Deep Learning' PC. The 'Deep' aspect comes from the fact that this PC is used to train deep artificial neural networks, which requires specialized hardware. This hardware is especially important to the story and future of deep learning. While we appear to be amidst the dawn of deep learning, with companies like Tesla, Waymo and Cruise working on challenges like full self-driving and developing advanced robot technologies, much of the core theory powering these advances was developed back in the 20th century. The concept of neural networks has been around for quite some time: early literature on neural networks dates back to 1943, and the backpropagation learning algorithm followed in 1975. Yet it took until the late 20th and early 21st centuries before neural networks became truly effective for many real-world applications. What explains this delay? There are a number of contributing factors, including a lack of research investment during the so-called 'AI winters'; however, hardware is often cited as one of the most important. Without appropriate hardware, it is not practical to run experiments with networks deep enough to yield many of the impressive results we see today. The need for advanced hardware comes from training neural networks via backpropagation, a computationally expensive algorithm that, at scale, requires the parallel compute of hardware accelerators such as GPUs and TPUs. This hardware was not as advanced or as readily available in the mid-to-late 20th century as it is today (ironically, the same hardware has recently been harder to get hold of than usual due to the global chip shortage).
Graphics processing units (GPUs) helped power the recent wave of advances in deep learning. GPUs are specialized processors that were initially created to accelerate graphics rendering. Deep learning and graphics rendering both rely on large numbers of matrix operations that need to be computed efficiently, and this is exactly what GPUs are designed to do by running computations in parallel across many cores. It is worth mentioning that there are other hardware accelerators, such as tensor processing units (TPUs), which Google created specifically for neural network workloads. From here on, however, this article focuses on GPUs, since these are commercially available to consumers. While advances in GPU technology have helped enable the dawn of deep learning, they also marked the creation of a new kind of PC: the deep learning PC. In the paragraphs to come, we discuss the components of such a PC and the steps you can take to build one yourself.
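To make the parallelism concrete, here is a small pure-Python sketch of a matrix multiplication, the core operation of a dense neural network layer. It is an illustration only, not how GPUs or deep learning frameworks are actually programmed. Each output element is an independent dot product, so all of them could be computed at the same time; a GPU exploits this by spreading such work across thousands of cores. The thread pool here only demonstrates that independence: because of Python's global interpreter lock, it will not actually speed up this arithmetic.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, col):
    """Dot product of one row of A with one column of B."""
    return sum(a * b for a, b in zip(row, col))


def matmul_parallel(a, b, workers=4):
    """Multiply a (n x k) by b (k x m), both given as lists of lists.

    Every output cell C[i][j] depends only on row i of a and column j of b,
    so the n * m dot products are independent and can be dispatched
    concurrently. GPUs exploit this same property at far larger scale.
    """
    cols = list(zip(*b))  # columns of b
    cells = [(i, j) for i in range(len(a)) for j in range(len(cols))]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map preserves input order, so results line up with `cells`
        values = list(pool.map(lambda ij: dot(a[ij[0]], cols[ij[1]]), cells))
    m = len(cols)
    # Regroup the flat list of results into n rows of m values
    return [values[i * m:(i + 1) * m] for i in range(len(a))]


# A batch of 2 inputs with 2 features each, times a 2x2 weight matrix
print(matmul_parallel([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
```

In a real network the matrices have thousands of rows and columns, which is why hardware that can run many dot products at once makes such a difference.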

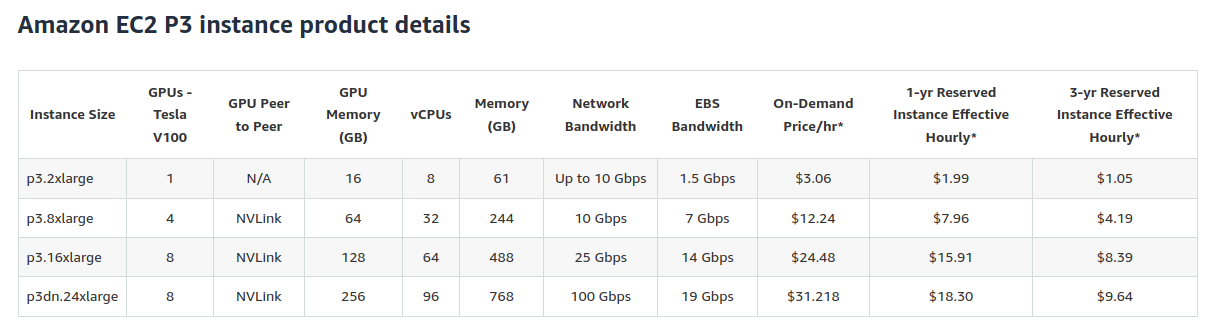

Why Build a Deep Learning PC?

Before diving into my experience of building a deep learning PC, it is worth asking why one would build one in the first place. The obvious answer is to train deep neural networks, but this overlooks the cost of owning a deep learning PC and the alternatives, such as renting. In my case, it made sense to invest in building my own PC because renting instances from cloud providers was proving less cost-effective than buying my own machine. A quick back-of-the-envelope calculation using Amazon Web Services (AWS) pricing shows why.

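A minimal sketch of this kind of back-of-the-envelope calculation is shown below. The $3.06 hourly rate is an assumption (the on-demand Linux price of a p3.2xlarge in us-east-1 for a long stretch; check current AWS pricing before relying on it), and the PC price, average power draw and electricity price are hypothetical placeholders to replace with your own figures.

```python
WEEKS_PER_YEAR = 52


def annual_cloud_cost(hours_per_week, usd_per_hour):
    """Cost of renting an instance for a fixed number of hours each week."""
    return hours_per_week * WEEKS_PER_YEAR * usd_per_hour


def annual_power_cost(avg_watts, hours_per_week, usd_per_kwh):
    """Electricity cost of running a local machine for the same hours."""
    kwh = avg_watts / 1000 * hours_per_week * WEEKS_PER_YEAR
    return kwh * usd_per_kwh


def breakeven_years(pc_price, hours_per_week, usd_per_hour, avg_watts, usd_per_kwh):
    """Years until owning beats renting (ignores resale value and upgrades)."""
    saving = (annual_cloud_cost(hours_per_week, usd_per_hour)
              - annual_power_cost(avg_watts, hours_per_week, usd_per_kwh))
    # If electricity costs more than renting, owning never breaks even
    return pc_price / saving if saving > 0 else float("inf")


# Assumed/hypothetical inputs: 20 h/week on a p3.2xlarge at $3.06/h,
# versus a $3,000 PC drawing 500 W on average with electricity at $0.30/kWh.
print(f"cloud: ${annual_cloud_cost(20, 3.06):,.0f} per year")
print(f"break-even: {breakeven_years(3000, 20, 3.06, 500, 0.30):.2f} years")
```

With these inputs, renting comes to roughly $3,180 a year, and the local machine pays for itself in about a year even after its electricity bill is taken into account.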

By this estimate, a deep learning researcher who runs, on average, 20 hours of small experiments per week (experiments that fit into the memory of a single GPU) on a p3.2xlarge instance will spend about $3,000 a year, and that is a conservative figure. A deep learning PC for the same price will likely have higher specs than a p3.2xlarge instance, can be used to run experiments at any time, and is actually owned by the researcher, who may use and upgrade it for years to come. There are other costs to owning a deep learning PC, such as the electricity needed to run it, but for power users it still works out cheaper. For many students, however, buying a machine is not feasible; that was the case for me when I first finished my undergraduate degree. I can empathise with this struggle for compute (and frankly I still experience it when I need GPUs for larger experiments), so for those learning on a budget I want to highlight the following resources, which I used regularly before investing in my own deep learning PC:

I'd be remiss if I didn't also mention the cons of owning a local machine. The most significant con in my experience is the noise generated by my PC's fans. This is not an issue during the day, as the noise really isn't that loud, but it does become one when I want to train a model overnight. In this regard, running experiments on rented hardware is a lot nicer, since you avoid the fan noise altogether. Another con is the inherent liability of owning your own hardware. While most hardware is covered by a manufacturer's warranty, if something malfunctions or breaks you are responsible for fixing it, whereas rented hardware is maintained by the provider. Though not common, it is also possible to break expensive hardware while assembling your machine, and if you do, you have very little recourse unless the component was faulty when you received it. Expensive mistakes are possible, and this liability is a potential con of owning a local machine. The good news is that it is very unlikely to be an issue for most people, especially if you do your due diligence and take the necessary precautions when building and maintaining your PC (some of which are discussed below).

Wizards of Computer Hardware

Before going further, I want to acknowledge a number of people who inspired my own build and who have already shared their wisdom with the broader online community. Special thanks go to the following people and groups:


Gathering Components

The first step I took in building a deep learning PC was to decide on a budget and the minimum performance requirements of the system. Building PCs can get expensive very quickly, so it is worth defining a budget from the outset to avoid overspending. In my case, I knew I wanted to train deep reinforcement learning algorithms to solve simulated robot manipulation tasks; as a result, my high-level performance requirements included:

Once I understood my system requirements, I researched the options for components that satisfied them. Below I outline some of the points I considered when ordering parts, which you may also want to consider when building your own PC. I also used pcpartpicker to check the compatibility of components, which was immensely useful. You can find the components for part of my build at the following link.

CPU

The CPU matters less for deep learning performance, since the heavy computations involved in training neural networks run on the GPU. For my purposes, however, I needed a powerful CPU to run CPU-based robot simulation environments for deep reinforcement learning experiments. For reference, I currently use the MuJoCo physics engine with the Robosuite framework. As a result, I chose an AMD CPU with 12 cores and 24 threads. If you are not simulating environment physics on the CPU or running other CPU-heavy programs, you can likely save money by buying a CPU with just enough power to support your GPU, as anything beyond that provides little benefit when you are only training deep neural networks.

CPU Cooler

I spent a while debating between liquid and air cooling. In general, liquid cooling is more effective but comes at a premium. For most setups I believe air cooling is sufficient. In my case, however, I chose to liquid cool the CPU as an extra precaution against overheating, since I knew I would be installing a large graphics card that would likely generate a lot of heat. A nice added benefit is that liquid cooling can be aesthetically pleasing. Be sure to invest in a reputable liquid cooler, though, as there are horror stories of some liquid coolers leaking and destroying components.

Motherboard

Your choice of motherboard should align with the components you want to use. I chose the ATX form factor, which is relatively standard and supported the components I wanted to add. One thing to bear in mind when buying a motherboard is whether it will need a BIOS update and whether it supports USB BIOS flashback. If your motherboard requires a BIOS update to support your chosen CPU and doesn't offer USB BIOS flashback, you will have to source an already-supported CPU to perform the update, which is less than ideal. You may also want to consider whether the motherboard has built-in Wi-Fi and Bluetooth. Finally, consider whether you want to expand your PC over time with, say, multiple GPUs; if so, a larger motherboard form factor may be worth it.

Memory

I chose 64GB of RAM in total because I wanted enough memory to perform data preprocessing efficiently. A good rule of thumb is to have at least as much RAM as the memory of your largest GPU.

Storage

I chose both a solid-state drive (SSD) and a hard drive for storage. The SSD enables fast booting of my system and applications, and makes for a more productive environment for developing code overall. I have also found a secondary hard drive useful for long-term storage and large datasets; for example, parts of the Waymo Open Dataset, which I used in a past project, are stored on my hard drive.

GPU

The GPU is a key decision in the design of your deep learning PC. At the time of writing, Nvidia essentially has a monopoly on the GPU market for deep learning, in large part because the community has adopted Nvidia's libraries (such as CUDA and cuDNN), which the most popular deep learning frameworks support. Given that you are likely to buy an Nvidia GPU, it may be worth avoiding RTX Founders Edition cards, as some have been reported to have cooling issues.

PSU

Power supply units (PSUs) are rated by their power capacity (e.g. 1000 watts) and by the efficiency with which they convert power drawn from the wall socket. For example, if a PSU's efficiency is 50% and its capacity is 1000W, it will draw 2000W from the socket to deliver its maximum output. A good standard to look for is 80 PLUS certification, which requires at least 80% efficiency at 20%, 50% and 100% of rated load. Beyond efficiency, the other consideration is whether the PSU has enough capacity to power the components you have chosen.

Case

The main consideration when choosing a case is how much RGB lighting comes built in :). OK, not quite: to keep the hardware above running at peak performance, cooling and motherboard compatibility are the main areas of concern. On the second point, your case must support the motherboard you have chosen, otherwise it is essentially useless for your purposes; case manufacturers should list the supported motherboard sizes in their specs. Your motherboard size will in part constrain the case sizes you can consider. In general, cases come in three sizes:

When it comes to cooling, check how many fans the case has and how they are oriented. The size of the case also matters: if components are crammed tightly together, your system may be more prone to overheating, which is especially a concern for systems with multiple GPUs. My choice: NZXT H510i (it looks sleek and satisfies my base requirements).
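Before moving on to assembly, the PSU arithmetic from the PSU discussion can be sketched in a few lines. Wall draw is output power divided by efficiency. The sizing helper adds a safety margin on top of the summed component power; the 30% headroom and the component wattages in the example are hypothetical, so check the specifications of your own parts.

```python
def wall_draw_watts(output_watts, efficiency):
    """Power drawn from the socket to deliver `output_watts` at a given efficiency."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return output_watts / efficiency


def recommended_psu_watts(component_watts, headroom=0.3):
    """Sum component power draw and add a safety margin (headroom is an assumption)."""
    return sum(component_watts.values()) * (1 + headroom)


# The example from the text: a 1000W PSU at 50% efficiency.
print(wall_draw_watts(1000, 0.5))  # 2000.0

# Hypothetical build: placeholder wattages, not measured values.
parts = {"cpu": 105, "gpu": 350, "motherboard_ram_storage_fans": 100}
print(f"suggested PSU capacity: {recommended_psu_watts(parts):.0f} W")
```

At 80% efficiency (the 80 PLUS floor), the same 1000W output would need 1250W from the wall, which is why higher efficiency ratings also mean less wasted heat.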

Assembling Components


Once you have gathered all the correct components, assembling a deep learning PC is similar to assembling LEGO, except it involves wires, expensive components and the need to take precautions to protect them. When all the parts arrive, you will likely notice that each component (except the case) is wrapped in an anti-static bag. This does exactly what the name says: it protects the component from static electricity. Why is this such an issue? Electronic devices such as the components of your PC are built to conduct electrical current in a controlled fashion through their wires and circuits. A static discharge can send an uncontrolled current through a component, which can damage it. To avoid static build-up, don't go running around on carpets before you begin your build :). More importantly, be sure to ground yourself before you begin, and consider getting a grounding wrist strap. The assembly portion of the build depends somewhat on the case and motherboard you choose, but here are some tips you may find useful:


Churning Tensors (and environmental considerations)

The moment the PC turns on for the first time is super cool; seeing it train models quickly is even cooler.
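Once the machine boots and your software is installed, a quick sanity check is whether your deep learning framework can see the GPU. The sketch below assumes PyTorch as the framework (the article doesn't prescribe one) and relies only on `torch.cuda.is_available`, `torch.cuda.device_count`, `torch.cuda.get_device_name` and `torch.cuda.get_device_properties`. It returns an empty list if PyTorch isn't installed or no CUDA device is visible, rather than raising an error.

```python
def visible_gpus():
    """List (name, memory in GiB) for each CUDA GPU that PyTorch can see."""
    try:
        import torch  # assumption: PyTorch is the framework you installed
    except ImportError:
        return []
    if not torch.cuda.is_available():
        return []  # driver/CUDA setup problem, or no Nvidia GPU present
    gpus = []
    for i in range(torch.cuda.device_count()):
        props = torch.cuda.get_device_properties(i)
        # total_memory is reported in bytes; convert to GiB
        gpus.append((torch.cuda.get_device_name(i), props.total_memory / 1024**3))
    return gpus


for name, mem_gib in visible_gpus() or [("no CUDA GPU visible", 0.0)]:
    print(f"{name}: {mem_gib:.1f} GiB")
```

If this reports no GPU on a freshly built machine, the Nvidia driver or the CUDA-enabled build of the framework is the usual place to start looking.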


Eventually you will have a cool-looking PC on your desk, ready to churn through tensors. If you are looking to fast-track your deep learning journey, having such a machine at your disposal will be of great benefit. It is also worth highlighting that training deep neural networks isn't the most eco-friendly activity out there, and as more and more people train these sorts of models, their environmental impact should be considered. Once the build is complete, you will next need to configure your software. If you'd like an idea of the software setup I use, have any questions, or enjoyed this article, feel free to leave a comment below. Later this year I will be publishing a guide on assembling and operating a real-life robot arm, so stay tuned for that and for the deep learning experiments I run on it. Thank you for stopping by, and have a great rest of the day :).