Peter David Fagan

Email | Google Scholar | GitHub | Blog

I’m building Corca Health, an AI-assisted ADHD screening platform designed to support GPs with early detection and triage.

My broader work focuses on preventative medicine, secure health data systems, and safe, reliable AI for clinical use. I have a background in mathematics and computer science, with a focus on artificial intelligence.

Outside of work, I enjoy CrossFit, sea swimming, and hiking.

First-Author Publications

Toward a Physical Theory of Intelligence

Peter David Fagan

Preprint (v1), 2025

We develop a physical theory of intelligence grounded in irreversible information processing and physical work, defining intelligence as the efficiency with which a system irreversibly processes information to perform goal-directed work.

This manuscript is an initial attempt at a physical theory of intelligence. Several results are conjectural; empirical validation, extended proofs, and architectural implications will be developed in subsequent versions. The broader aim is to ground intelligence in physical law to support the safe development of artificial intelligence.

Keyed Chaotic Dynamics For Privacy-Preserving Neural Inference

Peter David Fagan

Preprint, 2025

We introduce a framework for applying keyed chaotic dynamical systems to encrypt and decrypt tensors in machine learning pipelines. This lightweight, deterministic approach enables authenticated inference without modifying model architectures or requiring retraining. Designed for privacy-first AI, this method provides a new building block at the intersection of cryptography, dynamical systems, and neural computation.

Learning from Demonstration with Implicit Nonlinear Dynamics Models

Peter David Fagan, Subramanian Ramamoorthy

Preprint, 2024

We introduce a new recurrent neural network layer that incorporates fixed nonlinear dynamics models whose dynamics satisfy the Echo State Property. We show that this layer is well suited to overcoming compounding errors in learning from demonstration. We evaluate neural network architectures with and without our layer on the task of reproducing human handwriting traces. The results show that the layer improves task precision and robustness to perturbations while maintaining low computational overhead.

Collaborative Publications

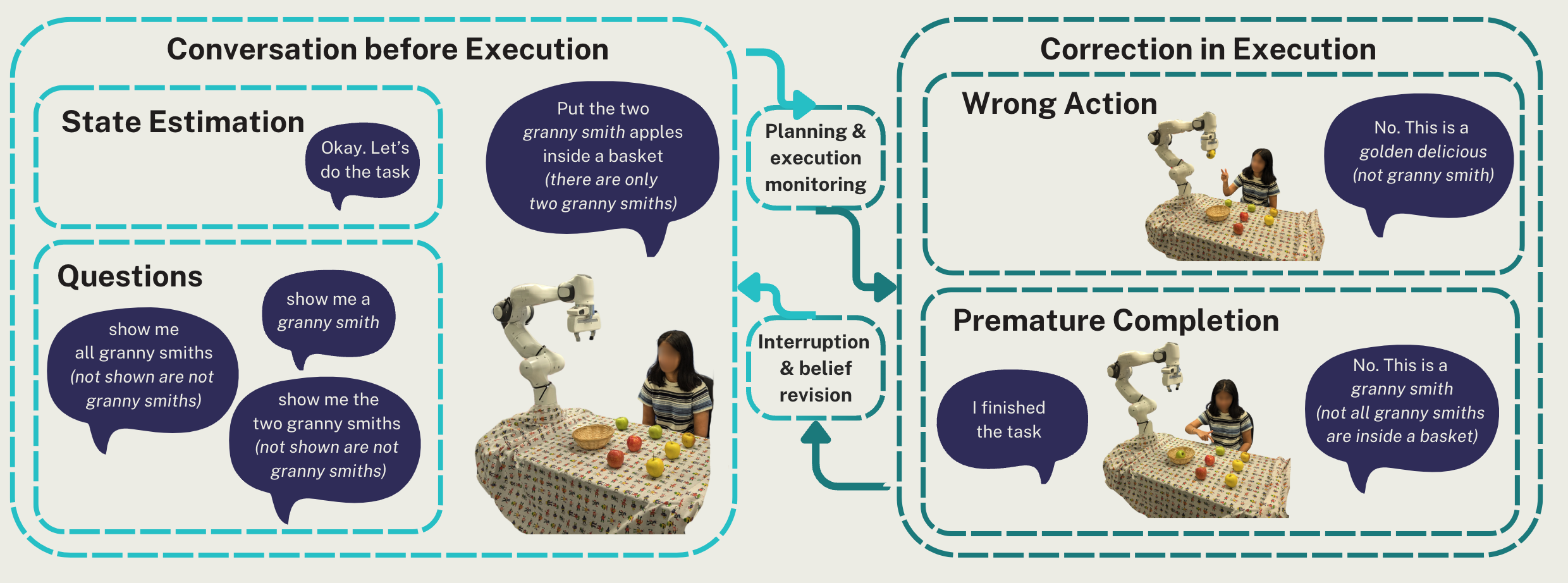

SECURE: Semantics-aware Embodied Conversation under Unawareness for Lifelong Robot Learning

Rimvydas Rubavicius, Peter David Fagan, Alex Lascarides, Subramanian Ramamoorthy

Preprint, 2025

In this work, we introduce an interactive task learning framework to cope with unforeseen possibilities by exploiting the formal semantic analysis of embodied conversation.

DROID: A Large-Scale In-the-Wild Robot Manipulation Dataset

The DROID Dataset Team

Robotics: Science and Systems (R:SS), 2024

In this work, we introduce DROID (Distributed Robot Interaction Dataset), a diverse robot manipulation dataset comprising 76k demonstration trajectories (350 hours of interaction data). The data was collected across 564 scenes and 86 tasks by 50 data collectors in North America, Asia, and Europe over the course of 12 months.

Open X-Embodiment: Robotic Learning Datasets and RT-X Models

Open X-Embodiment Team

IEEE International Conference on Robotics and Automation (ICRA), May 2024

In this work, we introduce Open X-Embodiment, a collection of robot learning datasets in a standardized format, assembled from 22 different robots through a collaboration between 21 institutions, together with RT-X models trained on this data. The datasets cover a wide range of tasks and scenarios. The RT-X models exhibit positive transfer, improving the capabilities of multiple robots by leveraging experience from other platforms.

Software

MoveIt 2 Python Library

Peter David Fagan

Google Summer of Code, 2022

The official Python bindings for MoveIt 2, the ROS 2 motion planning framework.